The truth is, learning software these days is pretty easy (and if it's not, then the software's probably no good). User interfaces are ever more intuitive, and there's so much documentation and so many forums ("fora" for the prescriptivists, but do you say "musea"? Nobody does that) for asking questions. What's hard is figuring out how to implement an appropriate method for answering a research question. Once you know that, everything else falls into place. Well, mostly.

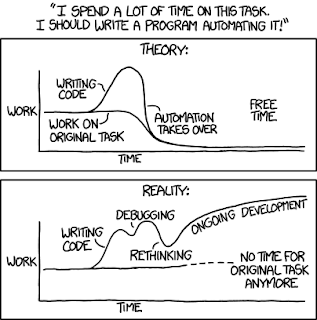

In the beginning, it might take a long time to work out a basic operation. (I remember that when ArcMap 10 first came out, the buffer operation didn't work.) And just because you've generated some output doesn't mean that it worked. There are lots of pitfalls along the way, and it takes time to learn what they are: datums are important, pay attention to units and file formats, check whether the origin is in the upper left or the center of the pixel, and watch capitalization, for crying out loud. Simple problems can be difficult to solve if error messages don't tell you how to fix them. I learned a lot as a PhD student just by watching other students troubleshoot problems. After a while you'll establish a relationship with the data and software you tend to use, and you'll even learn to anticipate issues before they arise.
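To make the pixel-origin pitfall concrete, here is a minimal sketch that assumes a north-up raster with square cells. The function name, its parameters, and the `origin_is_corner` flag are hypothetical, chosen for illustration rather than taken from any particular GIS package. If the origin marks the upper-left *corner* of the first pixel, a pixel's center sits half a cell in from the origin; if you wrongly assume it marks the pixel *center*, every location shifts by half a cell in both x and y.

```python
def pixel_center(origin_x, origin_y, cell_size, row, col, origin_is_corner=True):
    """Return the map coordinates of the center of pixel (row, col).

    Hypothetical helper. Assumes a north-up grid: x increases with col,
    y decreases with row.
    """
    # If the origin is the upper-left corner, step in half a cell to reach the center.
    # If the origin already marks the center of pixel (0, 0), no offset is needed.
    offset = 0.5 if origin_is_corner else 0.0
    x = origin_x + (col + offset) * cell_size
    y = origin_y - (row + offset) * cell_size
    return x, y


# Illustrative values: a 30 m grid with an arbitrary origin.
correct = pixel_center(500000.0, 4200000.0, 30.0, 0, 0, origin_is_corner=True)
misread = pixel_center(500000.0, 4200000.0, 30.0, 0, 0, origin_is_corner=False)
print(correct)  # (500015.0, 4199985.0)
print(misread)  # (500000.0, 4200000.0)
```

With 30 m cells, the misreading puts every pixel 15 m off in each direction. That is small enough to go unnoticed on a map but large enough to matter when you overlay the raster with point data.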

There's a big psychological component to learning something new, and I think it's mostly about trusting that there is a solution to the problem and that you can find it. A professor of mine once told me that if you start thinking the computer might be broken, you should walk away for a bit. I guess one of the benefits of spending so many years on this stuff is that I need fewer walks when I'm working on something difficult. Neuroplasticity for the win!
